Tuning Considerations

Various strategies are available for tuning integrations, including the following:

SQL Mapping

Use the SQL Mapping feature for complex mapping requirements. It can also replace multiple wildcard * to * mapping rules with a single pass over the database.

Each type of mapping uses resources differently. Mapping performance follows this order, from EXPLICIT (fastest) to MULTI-DIM (slowest):

- EXPLICIT

- IN

- BETWEEN and LIKE

- MULTI-DIM

Because multi-dim mappings are the slowest, limit them to complex use cases that need a combination of EXPLICIT and LIKE mapping, for example, ENTITY = 100 AND ACCOUNT LIKE 4*.
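The ordering above can be made more concrete with a rough Python analogy. This is a sketch under loud assumptions, not how Data Integration works internally (it processes mappings with SQL in the database), and every function and rule structure here is hypothetical. It shows why cost grows as rules stop being simple equality tests: an EXPLICIT rule is one keyed lookup no matter how many rules exist, IN and BETWEEN rules need one comparison per rule, LIKE rules need one wildcard match per rule, and a MULTI-DIM rule needs one wildcard match per dimension per rule. `fnmatchcase` stands in for LIKE wildcards; it also interprets `[...]` as a character class, which may differ from Data Integration's wildcard rules.

```python
from __future__ import annotations

from fnmatch import fnmatchcase


def explicit_match(value: str, rules: dict[str, str]) -> str | None:
    # One hashed lookup, regardless of how many explicit rules exist.
    return rules.get(value)


def in_match(value: str, rules: list[tuple[frozenset[str], str]]) -> str | None:
    # One set-membership test per IN rule.
    for members, target in rules:
        if value in members:
            return target
    return None


def between_match(value: str, rules: list[tuple[tuple[str, str], str]]) -> str | None:
    # One range comparison per BETWEEN rule (string comparison of source values).
    for (low, high), target in rules:
        if low <= value <= high:
            return target
    return None


def like_match(value: str, rules: list[tuple[str, str]]) -> str | None:
    # One wildcard comparison per LIKE rule; patterns cannot be used as hash keys.
    for pattern, target in rules:
        if fnmatchcase(value, pattern):
            return target
    return None


def multi_dim_match(row: dict[str, str], rules: list[tuple[dict[str, str], str]]) -> str | None:
    # Several wildcard comparisons per rule: one for each dimension the rule names.
    for conditions, target in rules:
        if all(fnmatchcase(row[dim], pattern) for dim, pattern in conditions.items()):
            return target
    return None
```

For instance, `multi_dim_match({"ENTITY": "100", "ACCOUNT": "4350"}, [({"ENTITY": "100", "ACCOUNT": "4*"}, "E100Rev")])` has to test both dimensions before it can return `"E100Rev"`, while `explicit_match` answers with a single lookup.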

As an additional tuning strategy, you may be able to replace multi-dim mappings with explicit mappings by combining source dimensions. For example, for the condition ENTITY=100 AND ACCOUNT=4100, you can concatenate ENTITY and ACCOUNT as the source and define an EXPLICIT mapping for 100-4100.
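The concatenation idea can be illustrated with a minimal Python sketch. It assumes a hyphen as the separator and uses hypothetical names (`concat_key`, `MULTI_DIM_RULES`, `EXPLICIT_RULES`, and the target member `Rev_E100`); it shows only the matching logic, not how Data Integration builds the concatenated source. The multi-dim condition ENTITY=100 AND ACCOUNT=4100 becomes a single explicit rule keyed on `100-4100`, so each row needs one lookup instead of one test per dimension.

```python
from __future__ import annotations

SEPARATOR = "-"  # assumed separator between the concatenated source values


def concat_key(row: dict[str, str], dims: tuple[str, ...] = ("ENTITY", "ACCOUNT")) -> str:
    """Build a single source value such as '100-4100' from several dimensions."""
    return SEPARATOR.join(row[d] for d in dims)


# Multi-dim form: every condition must be tested for every row.
MULTI_DIM_RULES = [({"ENTITY": "100", "ACCOUNT": "4100"}, "Rev_E100")]

# Equivalent explicit form: one hashed lookup on the concatenated key.
EXPLICIT_RULES = {"100-4100": "Rev_E100"}


def map_multi_dim(row: dict[str, str]) -> str | None:
    for conditions, target in MULTI_DIM_RULES:
        if all(row[d] == v for d, v in conditions.items()):
            return target
    return None


def map_explicit(row: dict[str, str]) -> str | None:
    return EXPLICIT_RULES.get(concat_key(row))
```

One design caveat: the separator must never occur inside the source values themselves, otherwise two different ENTITY/ACCOUNT pairs could produce the same concatenated key.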

Expressions

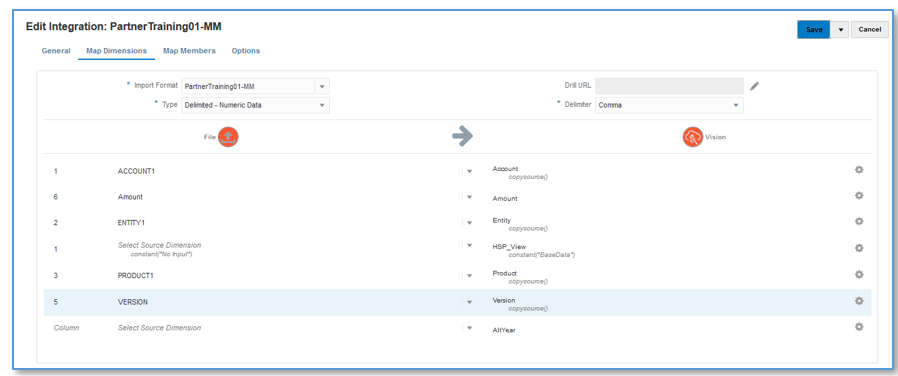

Expressions may also be used instead of mapping rules, and this technique also helps improve performance. To replace the * to * "like" mapping rules, the CopySource expression may be used, and looks like the following:

This expression has the same effect as the * to * mapping, but it is applied during the import step of the load process rather than through a scan of the table with a SQL statement. Expressions perform roughly the same as a single SQL mapping rule; however, use expressions when data volume is large so that mapping does not fail because of database governor limits.
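The difference between the two approaches can be sketched in Python, with hypothetical function names and an in-memory list standing in for the staging table. The * to * rule is a separate pass over rows that are already staged, while a CopySource-style expression fills in the target value as each row is parsed, so no second pass is needed. This only illustrates the single-pass idea; it is not how Data Integration implements CopySource.

```python
from __future__ import annotations

import csv
import io


def import_then_map(text: str) -> list[dict[str, str]]:
    """Two passes: stage the rows, then scan them again to apply a * to * rule."""
    staged = list(csv.DictReader(io.StringIO(text)))  # pass 1: import into staging
    for row in staged:                                # pass 2: mapping scan
        row["ACCOUNT_TARGET"] = row["ACCOUNT"]        # * to *: copy source as-is
    return staged


def import_with_copy_source(text: str) -> list[dict[str, str]]:
    """One pass: the copy happens while each row is imported."""
    rows = []
    for row in csv.DictReader(io.StringIO(text)):
        row["ACCOUNT_TARGET"] = row["ACCOUNT"]        # applied at import time
        rows.append(row)
    return rows
```

Both functions produce the same rows; the second simply never revisits the staged data, which is the property that helps large loads stay clear of database limits on a mapping scan.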

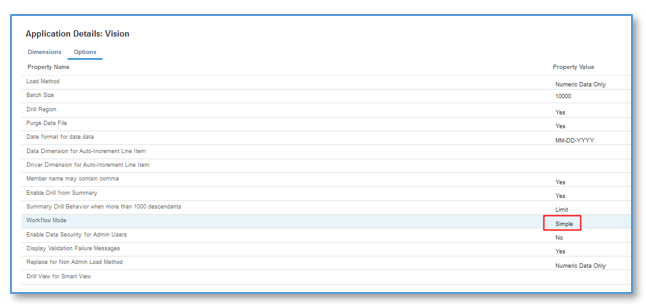

Simple Workflow Mode

In Simple Workflow mode, the TDATASEG table is bypassed and data is loaded directly to the target. This eliminates both the copy of data into TDATASEG and the later delete from TDATASEG. The only caveat is that drill-through to the Data Integration landing page is unavailable; drill-through using direct drill is still available.

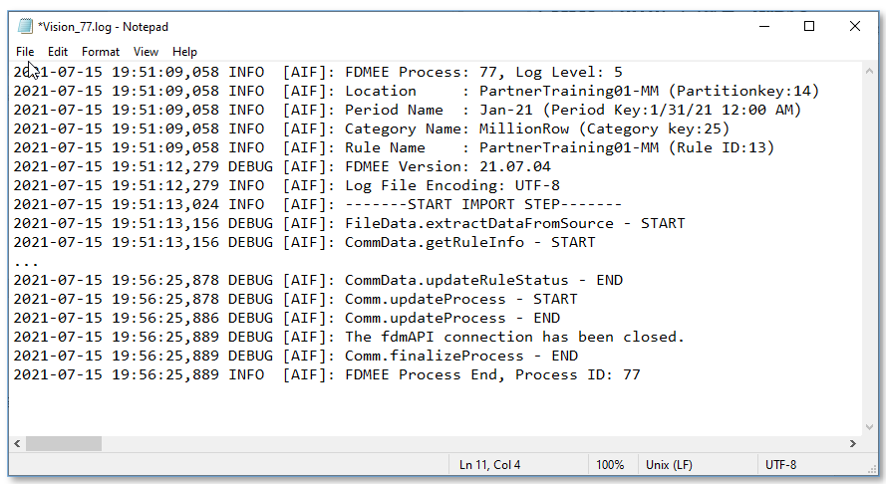

Using this simple workflow mode with expressions, the entire load process took 5 minutes and 16 seconds:

Quick Mode

Consider Quick mode for high-volume data loads that do not require complex transformations. Quick mode bypasses most of the steps and database tables in the workflow process, but it does support expressions for simple transformations. As a rough benchmark, Quick mode can load approximately 1,000,000 rows per minute to the target application. Direct drill is also available in Quick mode, so users can bypass the Data Integration landing page when drilling.
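A back-of-the-envelope load time follows directly from that benchmark. This Python sketch assumes the rough figure of 1,000,000 rows per minute holds for your environment, which it may not; the function name is hypothetical.

```python
QUICK_MODE_ROWS_PER_MINUTE = 1_000_000  # rough benchmark quoted in the text


def estimate_quick_mode_minutes(row_count: int,
                                rows_per_minute: int = QUICK_MODE_ROWS_PER_MINUTE) -> float:
    """Rough load time in minutes; actual throughput varies by environment."""
    if rows_per_minute <= 0:
        raise ValueError("rows_per_minute must be positive")
    return row_count / rows_per_minute
```

For example, a 4,500,000-row load works out to roughly 4.5 minutes at that rate.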

Additional Considerations

When defining integrations, the workflow mode and load method directly affect load performance for a given data volume. For loads of up to about 500,000 source rows that use the "Numeric Data Only" load method, any workflow mode works well.

With the "All Data with Security" load method, expect the data load to take longer, because each row is validated against any user-defined security in the target application.

When loading files of more than 1,000,000 rows, the system performs batch updates and deletions on the TDATASEG_T and TDATASEG tables based on the "Batch Size" setting in the Target Options (see Defining Target Options). In some cases, such a file can be split into several files of fewer than 1,000,000 rows each, which usually improves performance. You can then create one integration per file, combine these integrations into a batch, and run the batch in parallel mode to preserve the performance gained by splitting the file. The batch also provides a single execution point that starts all of the integrations for the split files.
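Splitting a large file can be scripted before the files are uploaded. The Python sketch below is a hypothetical helper, not part of Data Integration. It assumes a line-oriented text file whose first `header_lines` lines are headers (copied into every part), and it writes parts of at most 999,999 data rows each, so every part stays below 1,000,000 data rows.

```python
from __future__ import annotations

from pathlib import Path

MAX_ROWS_PER_FILE = 999_999  # keep each part below 1,000,000 data rows


def split_data_file(source: Path, out_dir: Path,
                    max_rows: int = MAX_ROWS_PER_FILE,
                    header_lines: int = 1) -> list[Path]:
    """Split a text data file into parts of at most max_rows data rows.

    The first `header_lines` lines are treated as headers and repeated in every part.
    """
    if max_rows <= 0:
        raise ValueError("max_rows must be positive")
    out_dir.mkdir(parents=True, exist_ok=True)
    parts: list[Path] = []
    with source.open("r", encoding="utf-8", newline="") as src:
        header = [src.readline() for _ in range(header_lines)]
        out = None
        rows_in_part = 0
        try:
            for line in src:
                # Start a new part before the first row and whenever the current one is full.
                if out is None or rows_in_part == max_rows:
                    if out is not None:
                        out.close()
                    part = out_dir / f"{source.stem}_part{len(parts) + 1}{source.suffix}"
                    out = part.open("w", encoding="utf-8", newline="")
                    out.writelines(header)
                    parts.append(part)
                    rows_in_part = 0
                out.write(line)
                rows_in_part += 1
        finally:
            if out is not None:
                out.close()
    return parts
```

Each resulting part would then get its own integration, and those integrations can be combined into a batch that runs in parallel mode.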

The following table provides recommendations for workflow mode, load method, and data volume.

Table A-1 Recommended Workflow Mode, Load Method, and Data Volume

| Workflow Mode | Load Method | Row Count |
|---|---|---|
| Full Workflow | Numeric Data Only | Up to about 3 million rows |
| Simple Workflow | Numeric Data Only | Up to about 4-5 million rows |
| Full Workflow | Admin User, All Data with Security, Validate Data for Admin = Yes | Fewer than 500,000 rows |
| Full Workflow | Admin User, All Data with Security, Validate Data for Admin = No (loads to the target using the Outline Load Utility) | Up to about 3 million rows |
| Quick Mode | Numeric Data Only | Any row count |
| Quick Mode (Validate Data for Admin = Yes is not supported) | Admin User, All Data with Security, Validate Data for Admin = No (loads to the target using the Outline Load Utility) | Any row count |
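Table A-1 can also be read as a simple lookup. The Python sketch below encodes the table's approximate row-count guidance. The load-method labels, the choice of 4,000,000 as the ceiling for "about 4-5 million", and 499,999 for "fewer than 500,000" are assumptions made for illustration, and a real decision should also weigh the transformation and drill-through needs described earlier.

```python
from __future__ import annotations

# Hypothetical labels for the load methods in Table A-1.
NUMERIC = "Numeric Data Only"
SECURITY_VALIDATE = "All Data with Security (Validate Data for Admin = Yes)"
SECURITY_NO_VALIDATE = "All Data with Security (Validate Data for Admin = No)"

# (workflow mode, load method) -> inclusive row-count ceiling; None means any row count.
RECOMMENDED_MAX_ROWS: dict[tuple[str, str], int | None] = {
    ("Full Workflow", NUMERIC): 3_000_000,
    ("Simple Workflow", NUMERIC): 4_000_000,         # lower end of "about 4-5 million"
    ("Full Workflow", SECURITY_VALIDATE): 499_999,   # "fewer than 500,000"
    ("Full Workflow", SECURITY_NO_VALIDATE): 3_000_000,
    ("Quick Mode", NUMERIC): None,
    # Quick Mode does not support Validate Data for Admin = Yes, so it has no such entry.
    ("Quick Mode", SECURITY_NO_VALIDATE): None,
}


def suitable_modes(row_count: int, load_method: str) -> list[str]:
    """Return the workflow modes whose Table A-1 guidance covers row_count."""
    return [
        mode
        for (mode, method), limit in RECOMMENDED_MAX_ROWS.items()
        if method == load_method and (limit is None or row_count <= limit)
    ]
```

For example, `suitable_modes(10_000_000, NUMERIC)` returns only `["Quick Mode"]`, matching the table's guidance for very large numeric loads.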

Note:

Tuning integrations is something of an art, and the same techniques may not apply in every case. Tuning usually takes several iterations to reach a final solution, so plan time for tuning in every implementation.

Oracle provides this guidance at the following link:

Tuning Considerations (oracle.com)